Realtime LLM and AI TTS

I wanted to make a quick post about a small single-day project I've been working on.

I was experimenting with Ollama and Llama 3, and I was really surprised by both the speed of generation and the accuracy. I was getting near GPT-3.5 levels of accuracy in a fraction of the time, all running locally and for free!

The speed at which this was working got me thinking: assuming I streamed the generated words, I could pipe those words to a Text-to-Speech (TTS) engine. As long as it runs even a bit quicker than real-time, I would have a realtime AI TTS system.

Also, for some reason, G-Man from Half-Life was on my mind, so that's what I set out to recreate!

Let's Get Started!

In this project, I used Ollama to run Llama 3 for text generation, and Coqui XTTS v2 for the TTS.

To start, I wanted to experiment with Llama 3 and Ollama. I did that by going to the Ollama website and running the download script:

curl -fsSL https://ollama.com/install.sh | sh

After waiting a bit, I was ready to go! I then ran the following command to pop into the Ollama chat interface and run some tests:

ollama run llama3

After some minutes of waiting for it to download, we could give it some fun prompts!

>>> Tell me a joke!

Here's one:

Why couldn't the bicycle stand up by itself?

(Wait for it...)

Because it was two-tired!

Hope that made you laugh!

>>>

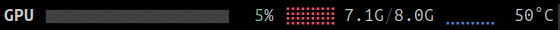

Nice! Bad jokes aside, we had officially downloaded and run our own LLM! All with great speed and still 1GB of VRAM to work with!

Now that all that worked, we needed to see if we could get something up and running in Python, and I wanted to see if I could find a good TTS engine to use. I stumbled upon Coqui XTTS v2, and it was perfect! It was fast, accurate, and I could use any voice I wanted!

So I opened a new Python folder and started setting up my Python environment:

mkdir Projects/PythonProjects/llama_gman

cd Projects/PythonProjects/llama_gman

python3 -m venv .venv # Not including the path for brevity

source .venv/bin/activate

pip install TTS

Then I ran into an error :(. Coqui TTS only supported Python 3.8 and up, but below 3.12, and I was running 3.12, so I had to downgrade my Python version. Instead of trying to downgrade manually, I decided to install pyenv and use that to manage my Python versions. If you don't have it, I would highly recommend it. It makes managing Python versions a breeze!

I'm not going to go into the setup of pyenv here, but after a couple of commands, we got that up and running, and we could install 3.10.14 and get Coqui TTS working!

pyenv install 3.10

pyenv local 3.10 # Use the newly installed version in this folder

rm -rf .venv

python -m venv .venv

source .venv/bin/activate

pip install TTS

Now we're all set up! Let's write a simple script to see if we can get this to work:

touch test.py

code .

In the file, we're going to write the following from the Coqui TTS documentation:
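The snippet itself isn't shown above, so here is a sketch along the lines of the XTTS v2 example in the Coqui docs. The function name `clone_and_speak`, the file names, and the up-front check for the reference clip are my own additions for illustration; the `TTS(...)` and `tts_to_file(...)` calls are the parts that come from the library.

```python
from pathlib import Path

# Model identifier for XTTS v2 as listed by Coqui TTS.
XTTS_MODEL = "tts_models/multilingual/multi-dataset/xtts_v2"


def clone_and_speak(text, speaker_wav, out_path="output.wav", use_gpu=True):
    """Speak `text` in the voice of the `speaker_wav` clip and write a WAV file."""
    # Fail early with a readable message (hypothetical convenience check).
    if not Path(speaker_wav).is_file():
        raise FileNotFoundError(f"Reference clip not found: {speaker_wav}")

    # Imported lazily because loading the library is slow.
    from TTS.api import TTS  # pip install TTS

    tts = TTS(XTTS_MODEL, gpu=use_gpu)  # downloads the model on first use
    tts.tts_to_file(
        text=text,
        speaker_wav=speaker_wav,
        language="en",
        file_path=out_path,
    )
    return out_path


# Example call (placeholder file name):
# clone_and_speak("Rise and shine.", "gman_sample.wav")
```

The first run downloads the model, so expect it to take a while before any audio comes out.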

Running that gave us this funny little output:

Input: [reference audio clip]

Output: [generated audio clip]

Truly not bad for 4 seconds of reference audio! It only took about 0.5 seconds to generate the audio, which is a realtime factor of about 0.3x (generation time divided by the length of the audio produced). Not bad at all!
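For anyone unfamiliar with the term, the realtime factor is just generation time divided by the duration of the audio that came out. Here's a tiny sketch of the arithmetic. The 1.7 second clip length is a made-up number that happens to land near 0.3; the post doesn't record the real clip length.

```python
def real_time_factor(generation_seconds: float, audio_seconds: float) -> float:
    """Generation time divided by audio length. Below 1.0 is faster than real time."""
    if audio_seconds <= 0:
        raise ValueError("audio_seconds must be positive")
    return generation_seconds / audio_seconds


# Hypothetical numbers: 0.5 s of compute for a 1.7 s clip.
rtf = real_time_factor(0.5, 1.7)
print(f"RTF: {rtf:.2f}")
print("faster than real time" if rtf < 1.0 else "slower than real time")
```

Anything under 1.0 means the engine can stay ahead of playback, which is the whole premise of this project.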

Next, we had to link Ollama to Python. That was simple enough with the Ollama Python package:

pip install ollama

Then we could write a simple script to get the text from Ollama and pipe it to the TTS engine. First, I wanted to test Ollama to make sure it was working:
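A minimal version of such a test script could look like the sketch below. It uses `ollama.chat` from the Ollama Python package; the `ask` helper and its `chat` parameter are my own invention, added only so the function can be tried with a stand-in when no server is available.

```python
def ask(prompt, model="llama3", chat=None):
    """Send a single user message to the model and return the reply text."""
    if chat is None:
        # Imported lazily; needs `pip install ollama` and a local Ollama server.
        import ollama
        chat = ollama.chat

    response = chat(
        model=model,
        messages=[{"role": "user", "content": prompt}],
    )
    return response["message"]["content"]


# With Ollama running locally:
# print(ask("Hello there!"))
```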

Now, I'm not going to lie, I thought I was going to have to run the Ollama server separately or make some code to start it up, but it was all handled in the background. This gave us this output:

$ python ollama_test.py

Hello there! It's great to meet you! I'm happy to chat with you and help with any

questions or topics you'd like to discuss. How's your day going so far?

$

Very nice! That was really simple! Finally, we needed a way to play the audio that was generated. For that, I used the sounddevice library.

With some basic code, we could now play the generated audio! Note: I have some extra code for saving the audio file so I could include it in this post.
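Roughly, the playback and saving could be sketched as follows. The helper names `play_audio` and `save_wav` are hypothetical, and saving with the standard-library `wave` module is just one option I picked to keep the example dependency-free; `sd.play` and `sd.wait` are the sounddevice calls doing the real work.

```python
import struct
import wave


def play_audio(samples, sample_rate=24000):
    """Play a sequence of float samples and block until playback finishes."""
    import sounddevice as sd  # pip install sounddevice

    sd.play(samples, samplerate=sample_rate)
    sd.wait()


def save_wav(samples, path, sample_rate=24000):
    """Write mono float samples in [-1, 1] to a 16-bit WAV file."""
    # Clip to the valid range, then scale to signed 16-bit integers.
    pcm = [int(max(-1.0, min(1.0, float(s))) * 32767) for s in samples]
    with wave.open(path, "wb") as wav_file:
        wav_file.setnchannels(1)
        wav_file.setsampwidth(2)  # 2 bytes = 16 bits per sample
        wav_file.setframerate(sample_rate)
        wav_file.writeframes(struct.pack(f"<{len(pcm)}h", *pcm))


# Example, assuming `tts` and `sample_file` exist as in the earlier test script:
# audio = tts.tts("Rise and shine.", speaker_wav=sample_file, language="en")
# save_wav(audio, "demo.wav")
# play_audio(audio)
```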

I'll admit, it took me way too long to figure out that 48000 / 2 (so 24 kHz) was the sample rate I needed to use. I couldn't find it anywhere in the documentation, but my friend mentioned that most models use 48 kHz. So I just divided by 2, and it worked.
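As far as I can tell, the reason this works is that XTTS v2 outputs 24 kHz audio. Rather than hardcoding the number, the rate can probably be read off the loaded model. The sketch below assumes the `TTS` object exposes `synthesizer.output_sample_rate`, which I haven't seen in the user-facing docs, so it falls back to 24000 if the attribute isn't there. The helper name is made up.

```python
from types import SimpleNamespace


def output_sample_rate(tts, default=24000):
    """Best-effort lookup of the model's output sample rate in Hz."""
    # Assumption: the TTS object has `synthesizer.output_sample_rate`.
    synthesizer = getattr(tts, "synthesizer", None)
    rate = getattr(synthesizer, "output_sample_rate", None)
    return int(rate) if rate else default


# Stand-in object so the sketch runs without loading a real model.
fake_tts = SimpleNamespace(synthesizer=SimpleNamespace(output_sample_rate=24000))
print(output_sample_rate(fake_tts))
```

With a real model loaded, the result could then be passed to `sd.play` in place of `48000 / 2`.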

Now, I'm going to do a very basic implementation. Something to note is that the TTS model is really only good for 2-3 sentences at a time, so I made this little function to split a message up into sentences and generate them:

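# Splits input into sentences
def get_sentences(text: str):
    sentences = []
    sentence = ""
    for char in text:
        sentence += char
        if char in [".", "!", "?"]:
            if len(sentence) > 1:
                # A real sentence: store it
                sentences.append(sentence)
            elif sentences:
                # A lone terminator (e.g. the 2nd dot of "..."): attach it to the previous sentence
                sentences[-1] += sentence
            sentence = ""
    # Text after the last terminator is not returned
    return sentences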
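To make the behavior concrete, here is the same splitter run on a short made-up string. The function is repeated so the example runs on its own, with a small guard for text that starts with a terminator.

```python
def get_sentences(text):
    """Split text into sentences ending in '.', '!' or '?'."""
    sentences = []
    sentence = ""
    for char in text:
        sentence += char
        if char in ".!?":
            if len(sentence) > 1:
                sentences.append(sentence)
            elif sentences:
                # Lone terminator, e.g. the extra dots of "..."
                sentences[-1] += sentence
            sentence = ""
    return sentences


demo = "Wait for it... Because it was two-tired! And then"
result = get_sentences(demo)
for s in result:
    print(repr(s))
# Prints:
# 'Wait for it...'
# ' Because it was two-tired!'
```

Two things to notice: the ellipsis stays attached to its sentence, and the unfinished " And then" at the end is not returned at all. Sentences also keep their leading space, which is why `strip()` gets called before speaking them.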

Not the prettiest code, but it works. Now I would just take the output and throw it into this function, then pipe it to the TTS engine!

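# Assumes `tts`, `sample_file` and `sd` (sounddevice) are set up as in the earlier scripts
def say_sentence(sentence):
    # Generate the audio first, while the previous sentence may still be playing
    tts_output = tts.tts(sentence.strip(), speaker_wav=sample_file, language="en")
    try:
        # Wait for the previous sentence to finish playing
        sd.get_status()
        sd.wait()
    except RuntimeError:
        # Nothing has been played yet, so there is nothing to wait for
        pass
    sd.play(tts_output, 48000 // 2)  # 24 kHz
    print("Audio generated for: ", sentence.strip())

def say_message(message):
    sentences = get_sentences(message)
    for sentence in sentences:
        say_sentence(sentence)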

Now this works and all, but if you give it a shot, you notice there's a lot of waiting to generate.

- First, we have to wait for the LLM to generate the text

- Then we have to wait for the TTS to generate the audio

- Then we have to wait for the audio to play

Lots of wasted time, especially when we can do all of this in parallel.

Conveniently, Ollama has a streaming function that we can use to stream the text as it is generated. So I just had to modify the code a bit to get it to work:

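def say_stream(response):
    message_so_far = ""  # text received but not yet spoken
    final_message = ""   # the full reply, returned at the end
    for chunk in response:
        message_so_far += chunk['message']['content']
        final_message += chunk['message']['content']
        # Get sentences
        sentences = get_sentences(message_so_far)
        # Speak every complete sentence except the newest one, which stays buffered
        for sentence in sentences[:-1]:
            print("Playing: ", sentence)
            print("_"*50)
            # Remove only the first occurrence so a repeated sentence is not lost
            message_so_far = message_so_far.replace(sentence, "", 1)
            say_sentence(sentence)
    # Speak whatever is left once the stream ends
    if message_so_far.strip():
        say_sentence(message_so_far)
    sd.wait()
    return final_message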

Then we would pipe the output from the LLM to this function:

response = ollama.chat(model="llama3", messages=[{"role": "user", "content": "Tell me a joke!"}], stream=True)

say_stream(response)

And that would fix all of our waiting issues!
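If you want to poke at the buffering idea without a model or speakers, here is a standalone sketch that feeds fake chunks through a generator. It's a variation on the code above, not the same thing: it uses a regex instead of `get_sentences`, and it yields a sentence as soon as any text follows its terminator. The name `iter_sentences` and the sample chunks are made up.

```python
import re

# One or more terminators in a row, so "..." counts as a single sentence end.
_SENTENCE_END = re.compile(r"[.!?]+")


def iter_sentences(chunks):
    """Yield complete sentences from an iterable of text chunks as they arrive."""
    buffer = ""
    for chunk in chunks:
        buffer += chunk
        while True:
            match = _SENTENCE_END.search(buffer)
            # Hold back a terminator at the very end of the buffer:
            # the next chunk might extend it (e.g. "." followed by "..").
            if match is None or match.end() == len(buffer):
                break
            yield buffer[:match.end()].strip()
            buffer = buffer[match.end():]
    # The stream is over, so flush whatever is left.
    if buffer.strip():
        yield buffer.strip()


fake_stream = [
    "Why couldn't the bi",
    "cycle stand up? Wait for it.",
    ".. Because it was",
    " two-tired!",
]
spoken = list(iter_sentences(fake_stream))
for sentence in spoken:
    print(sentence)
```

In the real thing, each yielded sentence would go to `say_sentence` instead of `print`. Note that a simple splitter like this will also break on decimals such as "3.5".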

Of course, this only says that one line, and G-Man sounds like he is ChatGPT, so we sprinkle in some more code to take input from the user and create a loop so we can keep the conversation going! We also add a system message to make it actually act like G-Man, and we get this script:
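A stripped-down sketch of that kind of loop is below. It is not the script from this project: the system prompt wording, the helper names, and the `speak` hook are all invented for illustration. In the real setup, `speak` would be `say_stream` from earlier, which speaks the streamed chunks and returns the full reply text.

```python
# Hypothetical system prompt; word it however you like.
SYSTEM_PROMPT = "You are the G-Man from Half-Life. Stay in character and keep replies short."


def new_history(system_prompt=SYSTEM_PROMPT):
    """Start a conversation with only the system message in it."""
    return [{"role": "system", "content": system_prompt}]


def join_chunks(stream):
    """Default `speak` hook: collect the streamed text without playing audio."""
    return "".join(chunk["message"]["content"] for chunk in stream)


def chat_turn(history, user_text, chat, speak=join_chunks, model="llama3"):
    """Run one user/assistant exchange and record both sides in `history`."""
    history.append({"role": "user", "content": user_text})
    stream = chat(model=model, messages=history, stream=True)
    reply = speak(stream)  # e.g. say_stream, which returns the final message
    history.append({"role": "assistant", "content": reply})
    return reply


def main():
    import ollama  # needs `pip install ollama` and a local Ollama server

    history = new_history()
    while True:
        user_text = input("> ")
        if user_text.strip().lower() in {"quit", "exit"}:
            break
        print(chat_turn(history, user_text, ollama.chat))


# Call main() to start chatting.
```

Keeping the whole history in `messages` is what lets the model remember earlier turns, and the system message at the top is what keeps it in character.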

Again, it's not the cleanest code, but here's a demo!

As you can see, there are definitely still some glaring issues. The cadence can be off because of the way we split things, and because I was recording at the same time, there was some delay. But it still works quite well. Also, something kind of funny: while recording, my GPU was drawing so much power when it generated that my lights flickered every time it ran. Only a spoonful of power!

Also, keep in mind it's California in the summer with record-breaking heat, so there have been a lot of brownouts recently. I doubt the power is really stable as it is.

Conclusion

Overall, I was really impressed with how well this worked. I was able to get a realtime AI TTS system up and running in a single day, and it was a lot of fun to work on! I would like to maybe turn this into a Discord bot that can listen to voice channels and respond in real time, but that's a project for another day!